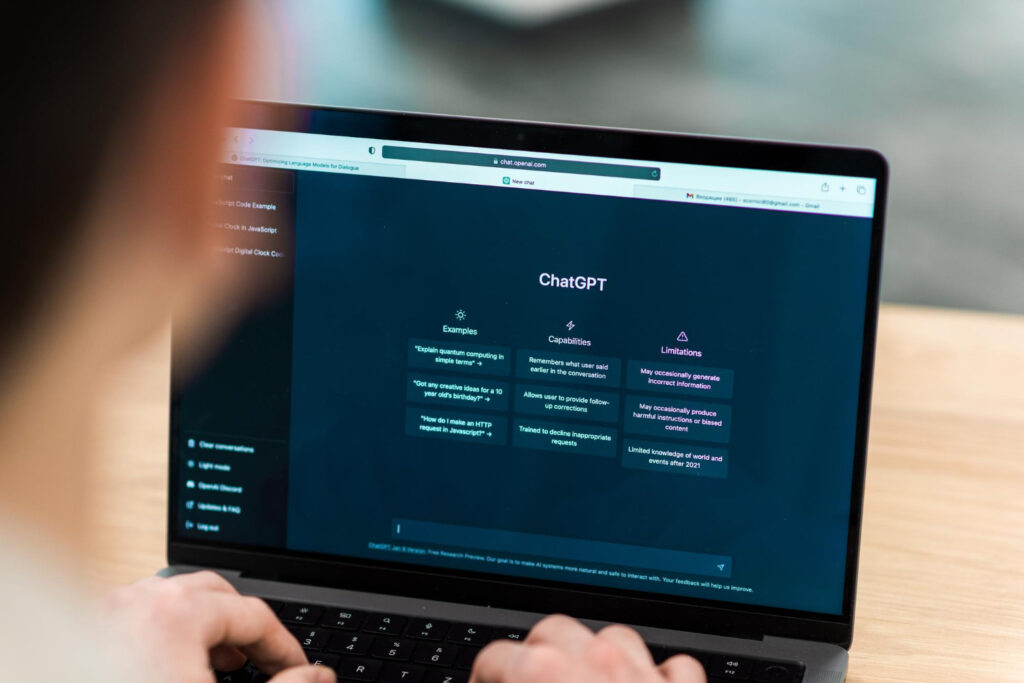

The growing use of ChatGPT means not only taking advantage of its benefits, but also allowing the application to collect certain information from users. OpenAI stores various information from ChatGPT accounts in order to improve the service and personalize the experience. In this context, ESET, a leading proactive threat detection company, explains how to configure accounts correctly and what to pay attention to in order to protect data.

The type of information ChatGPT collects includes:

Account-specific data: this category includes the email address used for authentication, as well as the username. For paying users, information about the payment method is stored. The preferences the user has configured, such as language, subject and tastes, are also stored, along with the conversation history if it is activated. Within that history, both the prompts and the responses obtained are kept in order to generate more tailored content and learn user preferences.

Technical information: this includes the user’s IP address, which is used to identify the user, primarily for security purposes and to prevent abuse and impersonation. The IP address can also be used to estimate the user’s location. In addition, data is collected on the model of the device used and the browser in which ChatGPT is running.

Usage data: ChatGPT stores information not only on the frequency and duration of use, but also on the functions used, such as browsing, code generation and image generation. ChatGPT stores the information users provide during their interactions, but the company does not store personal data outside of active sessions unless the user so requests. In any session, the user may request that the information be deleted.

“ChatGPT does not have direct access to personal and/or private information, but it remembers the information that the user provides during active sessions or even later if the user configures it that way. That is why the amount of information stored will depend on the user and the configuration of their sessions, although by default the information is saved session by session independently. It is clear that in the first instance it is the user who can filter what type of information they share”, says Fabiana Ramírez Cuenca, Computer Security Researcher at ESET Latin America.

Users often share private or sensitive information, such as financial data or credentials for different accounts. Although sharing data with the GPT model is not a risk in itself, since the information is only stored for that user and in that session, there is a risk that unauthorized access to a ChatGPT account could expose it. If this happens, a cybercriminal could access the conversation history and, consequently, learn any information shared by the user. As an example, in 2023 Group-IB detailed in a report how more than 100,000 ChatGPT accounts were being traded on the dark web.

“The severity of an account compromise can vary, as there are many associated risks. For example, the data obtained can be used for social engineering or identity theft attacks”, says Ramírez Cuenca from ESET Latin America.

OpenAI generally stores data temporarily and for the sole purpose of improving the user experience. For free users, conversation histories are not kept permanently; paid users can determine the storage time themselves. All information is subject to rigorous security policies.

For data at rest, OpenAI uses AES-256 encryption, and for data in transit, TLS 1.2 or higher. In addition, user consent is required for the storage and processing of data. Users can review the policies, terms and conditions that they voluntarily accept at registration, and can choose their privacy preferences within the settings. Furthermore, in compliance with data protection regulations, users may consult the information stored about them and request its modification or deletion.
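As an illustration of what "TLS 1.2 or higher" means from the client side, the following minimal sketch uses Python's standard `ssl` module to build a connection context that refuses older protocol versions. It does not represent OpenAI's own configuration; the function names `make_strict_tls_context` and `open_url` are hypothetical and exist only for this example.

```python
import ssl
import urllib.request


def make_strict_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context that rejects anything older than TLS 1.2."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse to negotiate protocol versions below TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def open_url(url: str) -> bytes:
    """Hypothetical helper: fetch a URL using the strict context above."""
    context = make_strict_tls_context()
    with urllib.request.urlopen(url, context=context) as response:
        return response.read()
```

A connection attempted through this context fails during the handshake if the server only offers a protocol older than TLS 1.2, rather than silently downgrading.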

To ensure account security in ChatGPT, ESET recommends applying various security measures, many of which can be configured within the application itself:

Security settings: It is recommended to use strong passwords for accounts and activate two-factor authentication (2FA), if available, in order to prevent unauthorized access. It is important to change passwords periodically.

Data management and consent: Before sharing information in ChatGPT, it is advisable to review the privacy settings to understand what data is stored and how it is used, allowing informed consent to be granted.

Review active sessions: ChatGPT allows you to review active sessions, which is useful for detecting unusual activity.

Control of shared information: Avoid sharing sensitive information in prompts, so that this data cannot be exposed if someone gains unauthorized access to the ChatGPT account.

Review terms and policies: It is advisable to review the application’s terms and policies regularly to learn about changes and new security options.

Use of secure devices: ChatGPT should only be accessed from devices protected with security solutions such as antimalware and running operating systems updated to the latest versions. This helps prevent, as far as possible, the exploitation of vulnerabilities that could give an attacker access to ChatGPT.

Log out on shared devices: If ChatGPT is used on a public or shared device, it is recommended to log out after use.

Report suspicious activities: If you detect unauthorized access attempts or unusual activities on the account, you should report it to OpenAI.
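The recommendation to avoid sharing sensitive information in prompts can be partly automated. The following is a minimal sketch, assuming a user or organization wants to filter text before it is pasted into ChatGPT. The patterns and the function name `redact_prompt` are hypothetical and purely illustrative; they catch only a few obvious formats and are no substitute for judgment about what to share.

```python
import re

# Hypothetical, illustrative patterns only; real-world detection needs far broader coverage.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # Key/value pairs such as "password: ..." or "api_key=...".
    "SECRET": re.compile(r"(?i)\b(?:password|passwd|api[_-]?key|token)\s*[:=]\s*\S+"),
}


def redact_prompt(prompt: str) -> str:
    """Replace text matching any sensitive pattern with a labeled placeholder."""
    redacted = prompt
    for label, pattern in SENSITIVE_PATTERNS.items():
        redacted = pattern.sub(f"[{label} REDACTED]", redacted)
    return redacted


if __name__ == "__main__":
    sample = "My card is 4111 1111 1111 1111 and password: hunter2"
    print(redact_prompt(sample))
```

Running the filter locally, before the text leaves the device, means that even if the account is later compromised, the conversation history contains placeholders instead of the original values.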
